There's been some hype behind the reveal of DirectX 12 with the new Windows 10, and Square Enix has partnered with Microsoft to show off the capabilities of the new API. Luminous Studio, within Square Enix, has delivered a demo called Witch: Chapter 0, which shows a witch crying in an attempt to capture real human emotion on screen. The results of the research will be added to the Luminous Studio Engine for future projects under the Square Enix umbrella. The demo ran on a PC with four NVIDIA GeForce GTX TITAN X cards in 4-way SLI to achieve the results you can see below. It has also been indicated that the Xbox One will be able to benefit from the changes in the future.

https://www.youtube.com/watch?v=rpDdOIZy-4k

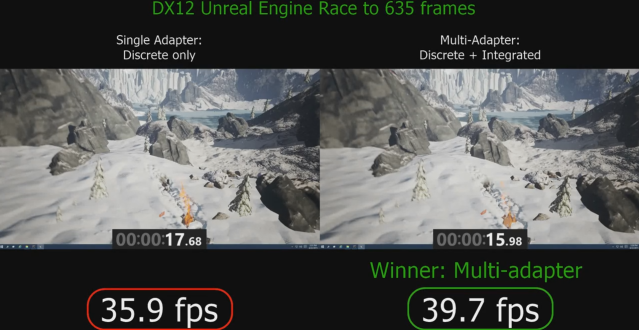

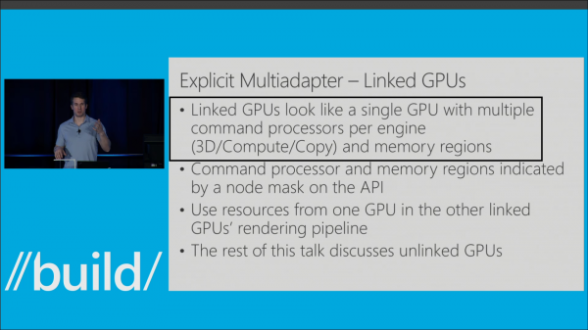

But what exactly has changed for the future of gaming here? Part of it is the API and its ability to split work and let developers use the hardware to its fullest potential. One of the newly unveiled features is heterogeneous multiadapter support, which allows the system to split the rendering workload between the NVIDIA graphics card being used in the demo and the Intel integrated graphics chip. One may wonder how much this overlaps with SLI and multiple graphics cards. While the basic idea is the same, it's the enhancements beneath the surface that will benefit systems for years to come.

One of the issues with SLI and its implementation over the past years has to do with the frame buffer and the splitting of work between multiple graphics cards. Even though you had multiple cards in your system that could handle the work, the memory usage between them was mirrored. What do I mean? Despite being able to work on different sets of tasks, each card would hold the same data (textures and geometry) for the frames it needed to render. So even if you have two 4 GB cards, that doesn't necessarily mean you have an 8 GB buffer to work with, because of the mirroring. Thanks to parallel processing, you would still get through each segment faster, since each card could skip sections that were already drawn, but the pipelining of work between the cards was nowhere near optimized.
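To put numbers on the mirroring problem, here is a minimal Python sketch. The function name `effective_memory_gb` is made up for illustration and is not part of any graphics API, and the non-mirrored case is an idealized upper bound that assumes the data can be divided perfectly between the cards.

```python
def effective_memory_gb(card_memory_gb, mirrored):
    """Return the memory (in GB) available for unique data across all cards.

    Hypothetical helper for illustration only.
    """
    if not card_memory_gb:
        return 0
    if mirrored:
        # Every card holds the same copy of the data, so the usable
        # pool is limited to what the smallest card can hold.
        return min(card_memory_gb)
    # Idealized case: each card holds different data, so capacities add up.
    return sum(card_memory_gb)


cards = [4, 4]  # two 4 GB cards, as in the example above
print("Mirrored:", effective_memory_gb(cards, mirrored=True), "GB")
print("Divided: ", effective_memory_gb(cards, mirrored=False), "GB")
```

With mirroring, the two 4 GB cards behave like a single 4 GB pool. The 8 GB figure only applies if the data really can be split cleanly between them.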

But with the resources within DirectX 12, a concept called Split Frame Rendering comes into play. In essence, developers can either use the resources within the API or code up an algorithm themselves to divide the data between the GPUs, so that each GPU works on its own part of the screen. This makes the division of work more efficient and avoids needless checks on already drawn data. In theory, as the number of GPUs increases, the portion each GPU needs to render decreases, making it faster to draw each frame to the screen. That, in turn, means a higher framerate for the end user.
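For a rough idea of what dividing the screen between GPUs could look like, here is a small Python sketch. It is not DirectX 12 code: the function `split_frame` and the choice of equal horizontal strips are assumptions made for illustration, and a real renderer would likely balance the regions by how expensive each part of the scene is to draw.

```python
def split_frame(height, gpu_count):
    """Divide a frame of `height` pixel rows into one strip per GPU.

    Hypothetical helper: returns a list of (gpu_index, first_row, end_row)
    tuples, where end_row is exclusive.
    """
    if gpu_count < 1:
        raise ValueError("need at least one GPU")
    base, extra = divmod(height, gpu_count)
    regions = []
    row = 0
    for gpu in range(gpu_count):
        # Hand out leftover rows one at a time to the first few GPUs
        # so that no row is skipped or drawn twice.
        rows = base + (1 if gpu < extra else 0)
        regions.append((gpu, row, row + rows))
        row += rows
    return regions


# A 1080-row frame split across four GPUs, as in a 4-way setup.
for gpu, start, end in split_frame(1080, 4):
    print(f"GPU {gpu}: rows {start} to {end - 1} ({end - start} rows)")
```

Each GPU ends up with 270 of the 1080 rows in this case, a quarter of the frame, which is the shrinking per-GPU share the theory relies on.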

Are you excited for the future of gaming with these new improvements in framerate, or does it not make a difference as long as you have a fun game to play? For developers out there, do you plan on putting more effort into parallel processing algorithms after seeing the benefit in a demo such as this?