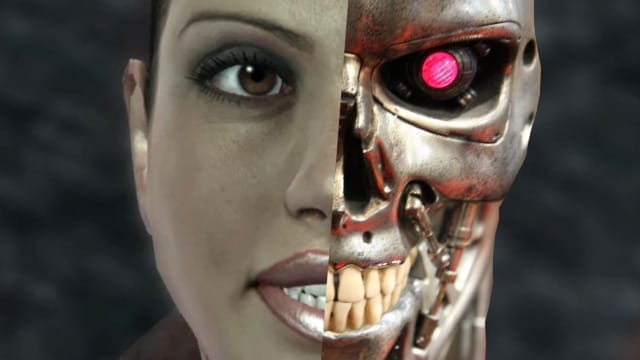

The Turing test, named after the pioneering computer scientist Alan Turing, remains an important philosophical and technical benchmark applied to the question of what exactly artificial intelligence could mean and how it could appear to us. In it's simplest form, if a program could hold its end of a conversation with a human being, convincing that person that it is too, a person, then that program could be meaningfully described as intelligent in some way. In a poetic reversal from speech to violence, the Botprize exists to ask whether or not a program can pass itself off as human by holding up its end of a deathmatch game in Unreal Tournament 2004.

The Botprize isn't new, the tournament was first held in 2008 during the IEEE Symposium on Computational Intelligence and Games in Perth Australia. When teams from universities across the world were invited to create a bot for Unreal Tournament 2004 which human judges would play against. Each team's entry was programmed to mimic the play style of a real player, with the goal of making them indistinguishable from their human controlled counterparts.

As of 2011 the Botprize judging protocol has been the following:

The system is based on a modification to the Link Gun. The primary mode is intended for tagging BOTs, while the secondary mode is for tagging HUMAN-controlled opponents. During the game, when a player believes he/she has identified an opponent as a BOT (respectively a HUMAN), he/she shoots the opponent using the primary (respectively secondary) mode of the Link Gun. The shot has no effect on the opponent, but the player will see a tag "BOT" (or "HUMAN") attached to the opponent to remind them of which opponents have been judged. If the player changes his/her mind, the opponent can be shot using the other mode to reverse the judgement, as often as he/she desires.This following video from the 2010 competition shows how a judge observed the CC-Bot2 before issuing an incorrect judgement, for which he was punished.

In order to pass the test and claim the grand prize a bot needed only to achieve a humanness rating-- calculated by the percentage of times that it was judged to be a real player--equal or higher than the median humanness rating assigned to the judges themselves. This proved elusive until 2012 when two bots managed it for the first time. AT the time New Scientist reported that the key strategies for successfully fooling the judges was, unsurprisingly, mimicry. Romanian born Mihai Polceanu's MirrorBot capitalized on mirroring and repeating the movements of it's human opponents. But curiously, the strategy was successful because it mimicked non-aggressive behaviors in moments where the player was not posing a threat.

My idea was to make the bot record other players at runtime instead of having a database of movements. This way, if the bot sees a non-violent player (shooting at the bot but around it, or shooting with a non-dangerous weapon) it would trigger a special behavior, mirroring. This makes the bot mimic another player in realtime, and therefore "borrowing" the humanness level.and Polceanu adds

Due to the lack of long-term memory and the realtime nature of the mirroring module, I was obliged to use classic graph navigation, which I customized in order to hide traces of bot-like movement such as the brief stops on navpoints, aiming behavior and elevator rides

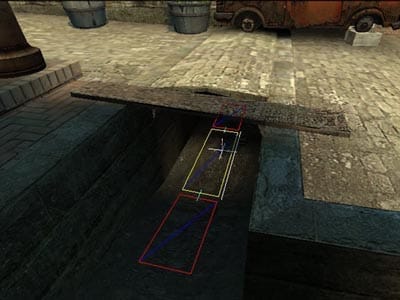

The other Prize winning team from 2012 used a particularly interesting strategy to avoid obvious bot-like behavior. They programmed the bot to observe and mimic behaviors displayed by human players, paying particular attention to movements dealing with the navigation of doorways, corners, and other tricky geometrical areas where a bot might give itself away by "Bouncing around." This approach to creating engaging A.I. can be seen in a more stripped down way in Valve's use of navigation meshes to inform NPC's of the different behaviors which they could use to navigate specific areas and obstacles. One can imagine how much the sense of of an intelligent NPC could be affected by allocating resources to mirroring behaviors.

As with MirrorBot, the University of Texas team's UT^Bot fooled the judged not by how well it performed in battle but by how it behaved between lulls in the violence. These behaviors which in the case of MirrorBot were interpreted as "social," suggest the how we judge the humanness of an NPC might have more to do with finding interesting noise in the otherwise clear signal of it's explicit actions. Look for example at the much celebrated A.I of Bioshock Infinite's character Elizabeth. Developers at 2K Marin have suggested that the most difficult thing about making her believable was deciding how she would move in specifically non-combat situations. Is it any surprise that 2k Marin sponsored the Botprize until 2012 and that the winning teams were invited to tour their Canberra studios?

After taking a hiatus in 2013 the Botprize returned last summer with an added judging protocol that enlisted a crowdsourced third person rating. The Humanness rating now includes data pertaining to how often a bot or player fooled an impartial spectator. MirrorBot took top prize again in 2014, achieving a humanness rating only three percentage points below that of the top scoring human player.

Anyone still running UT2004 can Download 2012's prize winning UT^Bot here. It might not be the most challenging opponent, but atleast you wont feel like you're playing the game by yourself.

Have a tip, or want to point out something we missed? Leave a Comment or e-mail us at tips@techraptor.net