Pathfinder War of Immortals Event Announced by Paizo

Paizo Inc. has announced Pathfinder War of Immortals, a widespread meta event covering multiple rulebooks, sourcebooks, and adventure paths.

April 17, 2024 | 04:35 EDT

Wizards of the Coast President Cynthia Williams has announced she is stepping down from her position in the company at the end of this month.

April 17, 2024 | 03:01 EDT

Warlord Games and Osprey Games have announced Bolt Action: Third Edition, the latest edition of their World War II war game.

April 17, 2024 | 02:00 EDT

Slavic Magic has announced that there will be no Manor Lords roadmap, at least in the game's early stages, as the dev has opted to go down a different road.

April 17, 2024 | 11:27 EDT

During today's Nintendo Indie World showcase, we got a glimpse of some indies heading to the Nintendo Switch this year, as well as a surprise announcement.

April 17, 2024 | 10:47 EDT

Sony and Nixxes have revealed the Ghost of Tsushima PC system requirements, and its beauty will come at a cost for higher-end machines.

April 17, 2024 | 08:56 EDT

Take-Two Interactive, the parent company of studios like Rockstar and Gearbox, will lay off around 5% of its workforce and cancel several projects.

April 17, 2024 | 08:16 EDT

Board game developer FLYOS and acclaimed designer Maxime Tardiff have announced Rayman The Board Game, an official adaptation of the platformer series.

April 16, 2024 | 03:18 EDT

We got to hear from Amanda Hamon and Trystan Falcone, Senior Game Designer and Graphic Designer for the Dungeons & Dragons 50th Anniversary adventure Vecna: Eve of Ruin, about the adventure's scope and special appearances.

April 16, 2024 | 11:00 EDT

Niantic has announced a slew of Pokemon Go updates aimed at overhauling certain aspects of the game's experience, including visuals, avatars, and more.

April 16, 2024 | 10:31 EDT

Nintendo has announced a new Indie World showcase for tomorrow, and it'll feature around 20 minutes of info about upcoming Switch indies.

April 16, 2024 | 09:37 EDT

Ubisoft has issued a clarifying statement regarding Star Wars Outlaws' Jabba's Gambit mission, which has met with criticism for being Season Pass-exclusive.

April 16, 2024 | 09:15 EDT

Arrowhead has released another Helldivers 2 update to fix some annoying crashes, and the devs also have some choice words about datamined content.

April 16, 2024 | 08:38 EDT

Techland has revealed more information about the Dying Light 2 Nightmare Mode update, which will bring a new difficulty level this week.

April 15, 2024 | 11:50 EDT

A new Sand Land trailer has been released, and it harnesses the power of an iconic 2000s dance tune with a very famous five-note intro.

April 15, 2024 | 10:23 EDT

Epic has announced that Star Wars is making its way back to the world of Fortnite, and this time, Lego Fortnite and Fortnite Festival are involved too.

April 15, 2024 | 09:55 EDT

Magic's newest set, Outlaws of Thunder Junction, is here along with the Most Wanted Commander deck, so we have a Most Wanted review and upgrade guide.

April 13, 2024 | 10:00 EDT

Magnetic Press has announced an official Planet of the Apes TTRPG, featuring the Magnetic Variant of the beloved West End Games D6 system.

April 12, 2024 | 03:35 EDT

Marvel and NetEase have revealed a new map for the upcoming third-person hero shooter Marvel Rivals, and it's one for the Loki fans.

April 12, 2024 | 11:35 EDT

Viral indie hit Content Warning has crossed a major sales barrier, and its developers want you to submit your footage for a fun in-game initiative.

April 12, 2024 | 10:01 EDT

HoYoverse has announced that Genshin Impact version 4.6 will launch later this month, bringing a watery new region and terrifying Weekly Boss, plus more.

April 12, 2024 | 09:26 EDT

Nintendo has announced the SNES and Super Famicom games gracing the Nintendo Switch Online library this month, and there are some gems to enjoy.

April 12, 2024 | 08:45 EDT

Modiphius Entertainment has announced a new campaign, Against The Faerie Queen, for their Celtic-inspired TTRPG, Legends of Avallen.

April 11, 2024 | 03:21 EDT

Bethesda has revealed the release date for the upcoming Fallout 4 PS5 and Xbox Series X|S update, and it's arriving later this month.

April 11, 2024 | 12:08 EDT

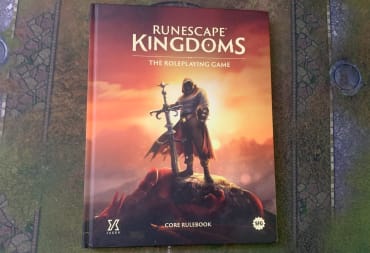

Runescape Kingdoms The Roleplaying Game isn't just another licensed RPG. It is a great entry point for people who want to get into the hobby.

April 11, 2024 | 12:00 EDT

Stunlock Studios has revealed exactly what you can expect in V Rising update 1.0, and it's looking like an absolutely massive update.

April 11, 2024 | 11:28 EDT

Amazon's Fallout TV show is finally out there in the wild, and to celebrate its release, a bevy of Fallout-themed goodies and updates are available.

April 11, 2024 | 10:54 EDT

Farming sim SunnySide, which boasts a focus on modernization and technology, now has an official release date on PC and consoles.

April 11, 2024 | 10:04 EDT

The Fallout TV show is incredible and, like The Last of Us before it, offers a formula for future gaming adaptations to follow.

April 11, 2024 | 10:00 EDT

A new Pioneers of Pagonia update has arrived that introduces a co-op multiplayer mode to the cozy Settlers-style city-builder.

April 11, 2024 | 09:02 EDT

Five Nights at Freddy's movie studio Blumhouse has confirmed that a sequel to the wildly successful film is officially in the works.

April 11, 2024 | 08:39 EDT

Browser and mobile game publisher Miniclip has announced that it has acquired PowerWash Simulator and Peaky Blinders: Mastermind developer FuturLab.

April 11, 2024 | 08:07 EDT

The Twenty-Sided Tavern is a new interactive Dungeons & Dragons stage play opening in New York next week. We sat down with its co-creators to learn what it's all about.

April 11, 2024 | 08:00 EDT

The Rogue Prince of Persia is a new roguelike from the developers of Dead Cells' expanded content, and it's coming very soon to Steam Early Access.

April 10, 2024 | 01:45 EDT

Red Hook Studios has announced a massive new Darkest Dungeon 2 update that will bring a whole new campaign mode to the dungeon-crawling RPG.

April 10, 2024 | 01:22 EDT

Upcoming Wartales DLC The Tavern Opens! has a release date, and you'll be managing your very own drinkin' establishment next week.

April 10, 2024 | 11:59 EDT

EA and DICE have released the latest Battlefield 2042 roadmap, which takes us all the way up to the beginning of summer, but don't get your hopes up.

April 10, 2024 | 10:52 EDT

The Planet Crafter, created by a team of two people, is a game about terraforming an alien world. Read our The Planet Crafter review to learn more!

April 10, 2024 | 10:24 EDT

Football, Tactics & Glory designer Andriy Kostiushko has announced Threads of War, a game inspired by the Ukraine war and made with his 11-year-old son.

April 10, 2024 | 09:07 EDT

Jonathan Nolan and Amazon Studios make a fantastic introduction to the world of Bethesda's sci-fi RPG. This is our review of Fallout Season One.

April 10, 2024 | 09:00 EDT

Blizzard and NetEase have announced that Blizzard's games will return to mainland China under a new publishing agreement.

April 10, 2024 | 08:09 EDT

Darrington Press has released their Daggerheart 1.3 playtest material. It contains updated rules and sheets courtesy of player feedback.

April 9, 2024 | 05:37 EDT

Black Salt Games and movie production company Story Kitchen have announced a live-action Dredge movie. Yes, you read that right.

April 9, 2024 | 12:07 EDT

Creative Assembly has revealed the three Legendary Lords making their way to Total War: Warhammer 3 as part of its Thrones of Decay DLC.

April 9, 2024 | 11:24 EDT

Children of the Sun is an entertaining yet brief puzzle FPS where you aim to kill a cult leader. It ends too quickly, and it left us wanting more.

April 9, 2024 | 11:00 EDT

EA has announced that Battlefield 2042 seasons will come to a close, and that Dead Space studio Motive has been added to the list of devs for the next game.

April 9, 2024 | 10:49 EDT

NetherRealm and Warner Bros have revealed the Mortal Kombat 1 Ermac release date, as well as giving us a first glimpse of the spectral fighter in action.

April 9, 2024 | 10:25 EDT

Arrowhead Game Studios has confirmed the return of the Helldivers 2 Automaton menace, and a new update has been deployed as well.

April 9, 2024 | 09:37 EDT

The Star Wars Outlaws release date has seemingly been leaked by none other than Ubisoft's very own Japanese arm ahead of a trailer debut later today.

April 9, 2024 | 09:12 EDT

The San Francisco wing of Gearbox Publishing has rebranded to Arc Games, and a new Hyper Light Breaker trailer is on the way soon.

April 9, 2024 | 08:52 EDT
