As 2014 comes to an end, one thing the year will be remembered for is the unusually high number of credible fears expressed by technological and scientific luminaries about the real-world dangers of the pursuit of artificial intelligence. The year saw Elon Musk compare the pursuit of A.I. to summoning a demon, that is, to creating something that would be beyond its creator’s ability to control.

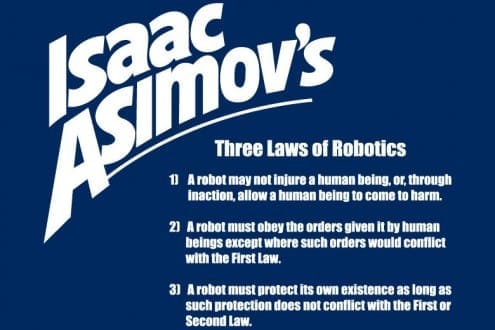

The year also saw Stephen Hawking, who has raised worries in the past about a potential meeting with alien life forms, echo Musk’s sentiment that the successful creation of A.I. poses the greatest existential threat to the continuation of the human species:

“The development of full artificial intelligence could spell the end of the human race. It would take off on its own, and re-design itself at an ever-increasing rate… Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.”

Fears about A.I. are nothing new, of course. Our popular culture has well-established, long-running narratives about human destruction at the hands of unruly machines. The idea of writing in a command failsafe so that machines would know never to harm humans goes back to Asimov in the 1940s. So what is it that makes contemporary fear about A.I. unique? Well, it’s the fact that the technology is, according to some, advancing at a pace that will outstrip our ability to regulate or control its development. Musk himself has been quoted as saying that the danger point is only 5-10 years away (though he made this comment in a private exchange which was never meant to go public). Giving credence to these fears has been a recent report on the ‘Neural Turing Machine’ developed by DeepMind Technologies.

According to New Scientist, the machine has proved itself capable of recognizing data patterns and generating its own methods for performing simple tasks, such as copying data blocks. In other words, the computer can program itself, albeit in an extremely rudimentary way. So how significant is this progress? In my opinion, not very. Well, at least not in terms of something to be afraid of.

The artificial neural net approach to developing A.I. is far from new, in concept or in practice, and while there have been small advances in the field there has to date been nothing, absolutely nothing, to indicate that we are anywhere near creating a real artificial intelligence. Hell, we don’t even know how our own brains create consciousness and I’m supposed to believe that we’re five years away from creating it in our software programming? Unless you believe that we’re going to stumble on it by complete accident, I simply don’t see how that’s possible. Even Ray Kurzweil, the A.I. pope, doesn't think it’s going to happen before 2045.

Kurzweil issued a rebuttal to Stephen Hawking in which he pointed out that regulations around potentially harmful technologies already exist to curtail the dangers of development in the field of biotechnology (a much greater threat to our survival, if you ask me) and in other emerging scientific fields.

The theoretical danger of a superhuman consciousness evolving abilities far beyond those of its human creators is, of course, very real. But what the doomsayers always fail to present is a practical scenario in which a super A.I. overcomes the initial challenges of assuring its survival in a physical universe. That is, a scenario that doesn’t involve the maker conveniently supplying the means for it to both escape and house itself. Flawed as mankind may be, we are the product of millions of years of evolutionary forces that made us into creatures capable of surviving the hardships of life on the surface of the planet. Just because a super-intelligent piece of programming may emerge doesn’t mean that it will emerge with the capability to overcome the very real problems of its own physical maintenance.

Computers break down; parts have to be replaced and rebuilt. Did the Terminator movies ever put forth a really good case for how Skynet manages to destroy life on the surface of the Earth without damaging itself? Doesn't the titular A.I. in Neuromancer need a human being to liberate it from its physical confines?

I’m far more worried about the very real dangers of sending automated war machines out into the world with soft-computing algorithms making life-and-death decisions. My main point is that, while we have as much to fear from A.I. as we did from nuclear science, we are nowhere near a ‘Manhattan Project’. There is no imminent danger, at least not yet.

But that’s just my opinion. Perhaps you disagree.
